“This level of insight isn’t possible with ICD-10 codes alone. To move beyond surface-level data, you need LLMs trained to decode clinical nuance. Our models are trained on proprietary de-identified clinical data and optimized for real-world context, enabling us to deliver intelligence beyond what’s possible with structured fields alone.”

Hunter Nance

VP of Engineering, egnite

At egnite, we’re building a smarter future for cardiovascular care—one where technology doesn’t just support clinicians, it empowers them. A key part of that mission? Turning data into insight—especially the complex, messy, unstructured kind buried deep in clinical notes and echo reports.

Nowhere is this more critical than in rare cardiovascular conditions like cardiac amyloidosis, a progressive and underdiagnosed disease where early detection can be the difference between delayed intervention and targeted care.

Thanks to advances in large language models (LLMs), what was once locked away in free text can now be translated into intelligence at scale to power research. We sat down with Hunter Nance, VP of Engineering at egnite and one of the minds behind our AI efforts, to talk about how egnite is using technology (in some cases, technology that wasn’t available even a year ago) to move beyond the constraints of structured data.

Let’s start with the big picture — what changed in the LLM world that opened new possibilities for egnite and the healthcare tech field?

The short answer is computing power. Large language models have been around for many years, but only recently have they become powerful enough to apply to a broader set of business and clinical use cases. The release of ChatGPT in late 2022 is what brought the concept into the public eye. For the first time, a truly sophisticated language AI became easily accessible, with the ability to hold conversations and answer complex questions. In many ways, this set off a chain reaction in the tech world. At the same time, multiple competing open-source LLMs emerged that anyone could experiment with.

These new developments led to sophisticated tools and techniques which enabled “fine-tuning” of these language models. We can take a very generic model with a broad knowledge base and feed it very specific data, such as de-identified clinical reports, which then focuses the model on particular topics of research interest. This fine-tuning capability has been the biggest driver of egnite’s success with this technology.
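To make the fine-tuning idea more concrete, the sketch below shows one common way to package focused, labeled examples as prompt/completion pairs in JSON Lines, a format many fine-tuning toolkits accept. It is a minimal illustration under stated assumptions, not egnite’s pipeline: the field names (`prompt`, `completion`), the prompt wording, and the example snippets are hypothetical, and the label set simply mirrors the three categories used in the cardiac amyloidosis project.

```python
import json
from dataclasses import dataclass

# Label set mirroring the three categories used for cardiac amyloidosis mentions.
LABELS = ("Confirmed Diagnosis", "Suspected/Under Evaluation", "Not Present")


@dataclass
class LabeledSnippet:
    text: str   # a de-identified passage from a clinical note (illustrative)
    label: str  # one of LABELS


def to_training_record(snippet: LabeledSnippet) -> dict:
    """Turn one labeled snippet into a prompt/completion pair.

    Hypothetical schema: the field names and prompt wording are assumptions.
    """
    if snippet.label not in LABELS:
        raise ValueError(f"unknown label: {snippet.label!r}")
    prompt = (
        "Classify how this note refers to cardiac amyloidosis "
        f"({'; '.join(LABELS)}).\n\nNote: {snippet.text}\n\nAnswer:"
    )
    return {"prompt": prompt, "completion": " " + snippet.label}


def to_jsonl(snippets) -> str:
    """Serialize snippets as JSON Lines: one JSON object per line."""
    return "".join(json.dumps(to_training_record(s)) + "\n" for s in snippets)


if __name__ == "__main__":
    examples = [
        LabeledSnippet("Cardiac amyloidosis suspected, awaiting test results.",
                       "Suspected/Under Evaluation"),
        LabeledSnippet("No evidence of cardiac amyloidosis on imaging.", "Not Present"),
    ]
    print(to_jsonl(examples), end="")
```

Real fine-tuning toolkits differ in the exact record schema they expect, so the shape returned by `to_training_record` would need to match whichever one is used.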

Can you explain, in simple terms, how we use LLMs today at egnite?

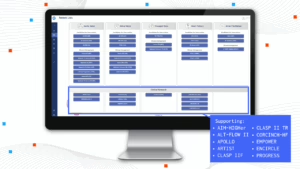

egnite’s current focus for these models is harnessing them to read, understand, and extract insights from unstructured clinical text to facilitate research. With each of our solutions, the LLM is only one piece of the puzzle, albeit a significant one. LLMs have a few pitfalls, chiefly hallucinations: cases where the model outputs something inaccurate or completely made up. For instance, an LLM may read through a patient’s history, notice a few minor symptoms and high cholesterol, and conclude that the patient has heart disease, even though nothing in the record explicitly documents it. Getting that under control involves a lot of complexity. We needed to develop a comprehensive verification and citation platform that analyzes every extracted output and ensures that each piece of information can be traced back to its exact origin.
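One way to picture a citation check is as a gate between the model and the final output: every extracted finding must carry a supporting passage, and that passage must actually be found in the source note. The sketch below assumes the extraction step returns such an evidence string (the `Extraction` and `Citation` types are hypothetical) and uses a whitespace- and case-tolerant exact match. It illustrates the idea only; it is not egnite’s verification platform.

```python
import re
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Extraction:
    finding: str   # what the model claims, e.g. "Suspected/Under Evaluation"
    evidence: str  # the passage the model says supports the claim (assumed output)


@dataclass
class Citation:
    start: int  # character offset where the evidence begins in the source
    end: int    # character offset just past the end of the evidence


def find_citation(source: str, evidence: str) -> Optional[Citation]:
    """Locate evidence in source, tolerating differences in whitespace and case."""
    words = evidence.split()
    if not words:
        return None
    # Match the evidence words in order, separated by any run of whitespace.
    pattern = r"\s+".join(re.escape(w) for w in words)
    match = re.search(pattern, source, flags=re.IGNORECASE)
    if match is None:
        return None
    return Citation(match.start(), match.end())


def verify(source: str, extractions: List[Extraction]
           ) -> Tuple[List[Tuple[Extraction, Citation]], List[Extraction]]:
    """Split extractions into (cited, rejected); cited items keep their offsets."""
    cited, rejected = [], []
    for ex in extractions:
        cite = find_citation(source, ex.evidence)
        if cite is None:
            rejected.append(ex)  # no traceable origin: treat as a possible hallucination
        else:
            cited.append((ex, cite))
    return cited, rejected
```

A finding such as “patient has heart disease,” inferred from cholesterol and minor symptoms rather than stated in the note, would have no matching passage and would land in `rejected`, which is the behavior a hallucination guardrail is after.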

Further, running these language models is extremely computationally expensive and slow. If you’ve used ChatGPT, you’ve likely seen it generating responses in real time, word by word. This presents a challenge at scale: processing millions of records this way would take years. To address this, egnite began developing a pre-processing layer that uses smaller language models to break text into more digestible chunks, then feeds those chunks into a parallel processing system that can handle hundreds of records per second.
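The chunk-then-parallelize pattern can be sketched in a few lines. As a deliberate simplification, a plain word-window splitter stands in for the smaller language models mentioned above, and `classify_chunk` is a hypothetical placeholder for a model call (here just a keyword check). The function names, window size, and overlap are assumptions chosen for illustration.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List


def chunk_text(text: str, max_words: int = 200, overlap: int = 20) -> List[str]:
    """Split text into overlapping windows of at most max_words words.

    Stand-in for model-based chunking: consecutive chunks share `overlap`
    words, so a short phrase crossing a boundary appears whole in one chunk.
    """
    if overlap >= max_words:
        raise ValueError("overlap must be smaller than max_words")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # the last window already reaches the end of the text
    return chunks


def classify_chunk(chunk: str) -> bool:
    """Hypothetical placeholder for a call to a hosted model; here a keyword check."""
    return "amyloid" in chunk.lower()


def process_records(records: Dict[str, str], workers: int = 8) -> Dict[str, bool]:
    """Chunk every record, classify all chunks concurrently, and flag each
    record if any of its chunks was flagged."""
    jobs = [(rid, c) for rid, text in records.items() for c in chunk_text(text)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        flags = list(pool.map(lambda job: classify_chunk(job[1]), jobs))
    result = {rid: False for rid in records}
    for (rid, _), flagged in zip(jobs, flags):
        result[rid] = result[rid] or flagged
    return result
```

Threads are a reasonable fit when each classification is a network call to a model server, because most of the time is spent waiting; for CPU-bound local inference, a process pool or batched GPU serving would be the more natural choice. The sketch makes no claim about throughput.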

Our most recent use case involves cardiac amyloidosis, a heart condition that’s notoriously tricky to catch. It’s relatively rare and often underdiagnosed because its signs can mimic other, more common heart conditions. Catching it reliably requires a level of insight that isn’t possible with ICD-10 codes alone. To move beyond surface-level data, you need LLMs trained to decode clinical nuance. Our models are trained on proprietary de-identified clinical data and optimized for real-world context, enabling us to deliver intelligence beyond what’s possible with structured fields alone.

Would you walk us through the cardiac amyloidosis project and how the AI works from a high level?

First, our solution scans a set of medical records, reviewing all sources of physician narratives, such as clinic visit notes, report findings, and echocardiogram reports. It then identifies potential references to cardiac amyloidosis. The next step is determining the context: what is actually being said. Is the doctor saying the patient has a confirmed diagnosis? Are they mentioning it as a possibility, such as “cardiac amyloidosis suspected, awaiting test results”? Or does the note state explicitly that the patient does not have it? Our model classifies each mention into one of three categories: “Confirmed Diagnosis,” “Suspected/Under Evaluation,” or “Not Present.” This allows us to surface patients who have this condition or may have it, based on what their doctors have documented in plain language.
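To show what the three-way output looks like, here is a deliberately simple, rule-based stand-in for the classification step, in the spirit of classic negation-detection heuristics. It is not egnite’s model: the cue lists are illustrative assumptions, and sentence splitting is naive. Each sentence that mentions cardiac amyloidosis is labeled with one of the three categories described above.

```python
import re
from typing import List, Tuple

CONFIRMED = "Confirmed Diagnosis"
SUSPECTED = "Suspected/Under Evaluation"
NOT_PRESENT = "Not Present"

# Illustrative cue lists (assumptions, far from exhaustive).
NEGATION_CUES = [r"\bno evidence of\b", r"\bnegative for\b", r"\bruled out\b",
                 r"\bdoes not have\b"]
UNCERTAINTY_CUES = [r"\bsuspected\b", r"\bpossible\b", r"\bconcern for\b",
                    r"\brule out\b", r"\bawaiting\b"]
MENTION = re.compile(r"\bcardiac amyloidosis\b", re.IGNORECASE)


def split_sentences(text: str) -> List[str]:
    """Naive splitter: break after '.', ';', '!' or '?' followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.;!?])\s+", text) if s.strip()]


def classify_mention(sentence: str) -> str:
    """Assign one of the three categories; negation cues take priority."""
    lowered = sentence.lower()
    if any(re.search(cue, lowered) for cue in NEGATION_CUES):
        return NOT_PRESENT
    if any(re.search(cue, lowered) for cue in UNCERTAINTY_CUES):
        return SUSPECTED
    return CONFIRMED


def classify_note(note: str) -> List[Tuple[str, str]]:
    """Return (sentence, category) for every sentence mentioning the condition."""
    return [(s, classify_mention(s)) for s in split_sentences(note) if MENTION.search(s)]
```

Hand-written cue lists like these break down quickly (a family-history mention would be labeled as confirmed here, for example), which is exactly the kind of context a fine-tuned model is meant to handle.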

To put this into perspective, before LLMs it was nearly impossible to extract this insight reliably at scale. Simple keyword searches would misfire. For example, they might find both “cardiac” and “amyloidosis” in the same note even if the terms referred to unrelated conditions or were part of the family history rather than the patient at hand. Now these models can read notes with human-like comprehension, and with our additional fine-tuning, they can understand subtle, disease-specific clinical nuances. This is arguably one of the toughest language challenges we’ve tackled to date.
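The keyword misfire is easy to reproduce. The snippet below contrasts a naive co-occurrence search with a slightly stricter exact-phrase search on two made-up notes, neither of which describes a patient who has the condition. The function names and sample notes are hypothetical.

```python
import re


def cooccurrence_match(note: str) -> bool:
    """Flag a note if both keywords appear anywhere in it."""
    lowered = note.lower()
    return "cardiac" in lowered and "amyloidosis" in lowered


def phrase_match(note: str) -> bool:
    """Slightly stricter: require the exact phrase 'cardiac amyloidosis'."""
    return re.search(r"\bcardiac amyloidosis\b", note, re.IGNORECASE) is not None


# Made-up notes: in neither case does the patient have cardiac amyloidosis.
NOTES = {
    "unrelated terms": "Cardiac exam unremarkable. History of renal amyloidosis.",
    "family history": "Family history: mother had cardiac amyloidosis. Patient denies symptoms.",
}

for name, note in NOTES.items():
    print(f"{name}: co-occurrence={cooccurrence_match(note)}, phrase={phrase_match(note)}")
```

Running it prints `co-occurrence=True, phrase=False` for the unrelated-terms note and `co-occurrence=True, phrase=True` for the family-history note. Tightening the pattern fixes the first false positive but not the second, because only an understanding of context can tell whose diagnosis is being described.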

What types of clinical data are we unlocking now that we couldn’t before?

The volume and complexity of data in each patient’s record are skyrocketing. Our model’s main use case focuses on unlocking unstructured data, such as physician notes, reports, and other free-text entries from the EMR, for research insights. Traditional structured data like diagnosis codes, procedures, and medications are all valuable, but gaining insight into the raw, unstructured data behind the scenes is what truly unlocks a holistic view of the disease.

However, as mentioned, there are many challenges to overcome with this type of data. There are linguistic challenges: the notes are incredibly long and full of advanced medical terminology, and every clinician phrases things a bit differently. And of course, there are technological challenges, such as handling hundreds of different formats, setting up the proper guardrails against hallucinations, and maintaining an architecture that can process billions of records.

What makes applying LLMs in healthcare — and specifically cardiology — uniquely challenging?

The biggest challenge with LLMs in healthcare is the risk of hallucinated output. This is by far the area where we have spent the most time and effort, combining multiple layers of technology to ensure everything our model produces is directly citable and transparent. When it comes to cardiology specifically, most of these models are trained on massive datasets sourced from the internet, textbooks, and a variety of other open and closed datasets. Similar to how humans learn, repetition matters. These models see millions of examples of common diseases and ailments, but rare diseases have much less data for the models to learn from. This is where the fine-tuning I mentioned earlier comes into play. By feeding in thousands of focused examples, you can steer the model to pull from a more targeted knowledge base.

What excites you most about what’s coming next in this space?

We are just scratching the surface of what this technology can do for healthcare. The world is rapidly approaching a point where these models could help clinicians anticipate what’s coming next. AI will eventually unlock hidden insights buried deep within a patient’s chart, allowing physicians to intervene earlier, before conditions progress in severity. Breakthroughs in this technology may also allow for more personalized care. LLMs will be able to summarize decades of patient history into a carefully curated narrative to assist the treating doctor.

What makes egnite uniquely positioned to lead this type of innovation – and why does this work matter now more than ever?

Beyond our deep cardiovascular domain expertise, our team consists of top-tier engineers, analysts, and data scientists who focus on this kind of innovation. We also have access to one of the largest ecosystems of real-world cardiovascular data in the US, updated daily, which enables us to test and refine our algorithms.

Cardiac amyloidosis is just the start. We are at a pivotal moment where our population is aging and the healthcare system is facing increasing strain. There is a pressing need for intelligent, data-driven solutions that can drive research forward at a scale not previously possible. AI has finally matured to the point where we can begin delivering on that promise.

The opportunity to redefine how we approach cardiovascular disease has never been clearer.

__

egnite’s data Registry delivers a comprehensive view of patient populations. If you’d like to explore how you can leverage real-world data (RWD) within your organization, please reach out to christopher.edie@egnitehealth.com.

Bio

Hunter Nance is the VP of Engineering at egnite, Inc., a leading cardiovascular digital healthcare company partnered with over 55 heart programs and 400 affiliated healthcare facilities nationally.